Sse Sst

The following step-by-step example shows how to calculate each of these quantities. To evaluate the contribution of the predictors fairly, we define the adjusted $R^2$.


I wrote these functions in R.

What is the SSE in statistics?

SSE is the sum of the squares of the individual responses $y_i$ minus the expected responses $\hat{y}_i$, where the expected response is calculated from the linear regression model fit. The line with the smallest SSE is called the least-squares regression line. SST stands for Total Sum of Squares.

We call this line the line of best fit. Using the least… Since $SST = SSR + SSE$, we have $1 = SSR/SST + SSE/SST$, where $SSR/SST$ is the proportion of the total sum of squares that can be explained (predicted) by the predictor $X$, and $SSE/SST$ is the proportion of the total sum of squares that is caused by the random effect. How do you get an SSR?
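One way to get SSR, together with SST and SSE, is sketched below in plain Python for a single predictor. The function names `fit_line` and `sums_of_squares` and the five data points are made up for illustration; this is a minimal sketch of the decomposition, not the code of any particular package.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for one predictor."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def sums_of_squares(xs, ys):
    """Return (SST, SSR, SSE) for the least-squares line through the data."""
    slope, intercept = fit_line(xs, ys)
    y_bar = sum(ys) / len(ys)
    fitted = [intercept + slope * x for x in xs]
    sst = sum((y - y_bar) ** 2 for y in ys)               # total variation
    ssr = sum((f - y_bar) ** 2 for f in fitted)           # explained by the line
    sse = sum((y - f) ** 2 for y, f in zip(ys, fitted))   # left in the residuals
    return sst, ssr, sse


# Hypothetical sample, chosen only so the arithmetic is easy to follow.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]

sst, ssr, sse = sums_of_squares(xs, ys)
print(sst, ssr, sse)              # approximately 6.0, 3.6 and 2.4
print(ssr / sst + sse / sst)      # the two proportions add up to about 1
```

For this sample the fitted line is $\hat{y} = 2.2 + 0.6x$, so $SSR/SST = 0.6$ of the total variation is explained and $SSE/SST = 0.4$ is left to the random effect.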

I use the terms SSTR and SSE just to build similarity to the ANOVA output we covered in Chapter 13 (ANOVA). The equation in the title is often written as.

For each $x$-value in the sample, compute the fitted value or predicted value. $\sum_{i=1}^{n} (y_i - \bar{y})^2 = \sum_{i=1}^{n} \cdots$ Intuitively speaking, with the SST unchanged, if the SSB is very big, the SSE tends to be small.

SST (Sum of Squares Total), SSE (Sum of Squared Errors), and SSR (Regression Sum of Squares). With that logic, $1 - SSE/SST = SSR/SST$. Sum of Squares Total, written SST, measures the variation of the variable about its center (the mean).

Step 1 is to take the difference between Actual values and Baseline values of the dependent variables.

SST, SSE, and SSR. `sse` is a network performance function. `perf = sse(net,t,y,ew,Name,Value)` has two optional function parameters that set the regularization of the errors and the normalizations of the outputs and targets.

Error is the difference between actual and predicted values. A good model should have a large $R^2 = SSR/SST = 1 - SSE/SST$. $R^2$ is called R-square, or the coefficient of determination. So SSE in this case.

SST = total sum of squares. $SSR = \sum (\hat{y}_i - \bar{y})^2$. Note that with $R^2$ and SST, one can calculate $SSR = R^2 \cdot SST$ and $SSE = (1 - R^2) \cdot SST$. Example.

The sum of SSE and SSB equals the sum of squared deviations of all the individual observations, SST.

Root Mean Square Error: standard deviation. $R^2_a = 1 - \dfrac{SSE/(n-p-1)}{SST/(n-1)}$. Sum Square Regression: sum of the squared errors of the points used for the regression.
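The adjusted $R^2$ can be sketched in a few lines of Python. The function name `adjusted_r_squared` is hypothetical, `n` is taken to be the number of observations and `p` the number of predictors, and the input values are illustrative only.

```python
def adjusted_r_squared(sse, sst, n, p):
    """Adjusted R^2 = 1 - (SSE / (n - p - 1)) / (SST / (n - 1)).

    n is the number of observations, p the number of predictors
    (both names are assumptions made for this sketch).
    """
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors plus one")
    if sst <= 0:
        raise ValueError("SST must be positive")
    return 1.0 - (sse / (n - p - 1)) / (sst / (n - 1))


# Illustrative values: SSE = 2.4, SST = 6.0, 5 observations, 1 predictor.
plain_r2 = 1.0 - 2.4 / 6.0
adj_r2 = adjusted_r_squared(2.4, 6.0, n=5, p=1)
print(plain_r2, adj_r2)   # the adjusted value is the smaller of the two
```

Because SSE is divided by $n-p-1$, adding a predictor that barely lowers SSE can decrease the adjusted value, which is what makes the comparison between models with different numbers of predictors fair.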

SST is the same as Sum of Squares Total. Is a higher or lower SSE better? $\text{SST}_{\text{otal}} = \text{SSE}_{\text{xplained}} + \cdots$

From these Wikipedia definitions. Sum Square Total: sum of all squared errors. In the usual ANOVA notation.

$SSE = \sum (\hat{y}_i - y_i)^2$. Sum of Squares Error, written SSE, measures the variation of the variable about its estimated value. SSE = residual sum of squares.

Sum of Square Error: sum of the least squared errors. ANOVA compares the within-group variance (SSE, the sum of squares within groups) and the between-group variance (SSB). For example, an R-square value of 0.8234 means that the fit explains 82.34% of the total variation in the data about the average.
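The within-group and between-group sums of squares that ANOVA compares can be sketched as follows. The function name `anova_sums_of_squares` and the three small groups are invented for illustration; the sketch assumes a one-way layout.

```python
def anova_sums_of_squares(groups):
    """Return (SST, SSB, SSE) for a one-way layout given as a list of groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)

    # Between groups: each group mean against the grand mean, weighted by size.
    ssb = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)

    # Within groups: each observation against its own group mean.
    sse = sum((v - sum(g) / len(g)) ** 2 for g in groups for v in g)

    # Total: each observation against the grand mean.
    sst = sum((v - grand_mean) ** 2 for v in all_values)
    return sst, ssb, sse


# Hypothetical data: three groups with means 2, 5 and 8.
groups = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
sst, ssb, sse = anova_sums_of_squares(groups)
print(sst, ssb, sse)   # 60.0 54.0 6.0
```

Here SSB is large relative to SSE, so most of the total variation comes from differences between the group means rather than from scatter within the groups.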

Sum of Squares Regression, written SSR, measures the variation of the estimated value about the center (the mean). $(2-2)^2 + (2-5)^2 + (8-5)^2 = 0 + 9 + 9 = 18$.

SSE is the same as Sum of Squares for Residuals. In statistics, the residual sum of squares (RSS), also known as the sum of squared residuals (SSR) or the sum of squared errors (SSE), is the sum of the squares of the residuals, that is, of the deviations of the predicted values from the actual empirical data. SSE is the sum of squares due to error and SST is the total sum of squares.

$R^2 = 1 - SSE/SST$, which is the proportion of variation in the response that can be explained by the regression model (or that can be explained by the predictors $X_1, \dots, X_p$ linearly). $0 \le R^2 \le 1$; with more predictor variables, SSE is smaller and $R^2$ is larger. Most people refer to it as the proportion of variation explained by the model, but sometimes it is called the proportion of variance explained. Total sum of squares.

It measures performance according to the sum of squared errors. Sum of Squares Error (SSE): the sum of squared differences between predicted data points $\hat{y}_i$ and observed data points $y_i$. SSTR is the same as Sum of Squares for Regression.

SSE stands for Sum of Squared Errors. For the ozone data we saw $r = .8874$, so $R^2 = .78875$ of the variation in $y$ is explained by the regression; with $SST = 1014.75$ we can get $SSR = R^2 \cdot SST = .78875 \times 1014.75 = 800.384$. $R^2 = 1 - SSE/SST$.
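The ozone arithmetic can be checked with a short Python sketch. It reads the figures quoted above as $R^2 = 0.78875$ and $SST = 1014.75$; the position of the decimal points is an assumption, since the source digits are run together, and the variable names are hypothetical.

```python
# Figures as read from the ozone example (decimal placement is an assumption).
r_squared = 0.78875
sst = 1014.75

ssr = r_squared * sst            # part of SST explained by the regression
sse = (1.0 - r_squared) * sst    # part of SST left in the residuals

print(round(ssr, 3))             # 800.384
print(round(sse, 3))             # 214.366
print(1.0 - sse / sst)           # recovers R^2 up to rounding error
```

The last line answers the reverse question as well: given SSR and SSE, $SST = SSR + SSE$, so $R^2 = SSR/(SSR + SSE) = 1 - SSE/SST$.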

How do you find R-squared from SSR and SSE? Sum of squares between groups. So when we subtract the expected response and subject it to the constraint that the first partials $= 0$, which gives two equations, that leaves $n-2$ degrees of freedom for the values of $y_i$ in order to.

SSR = regression sum of squares.


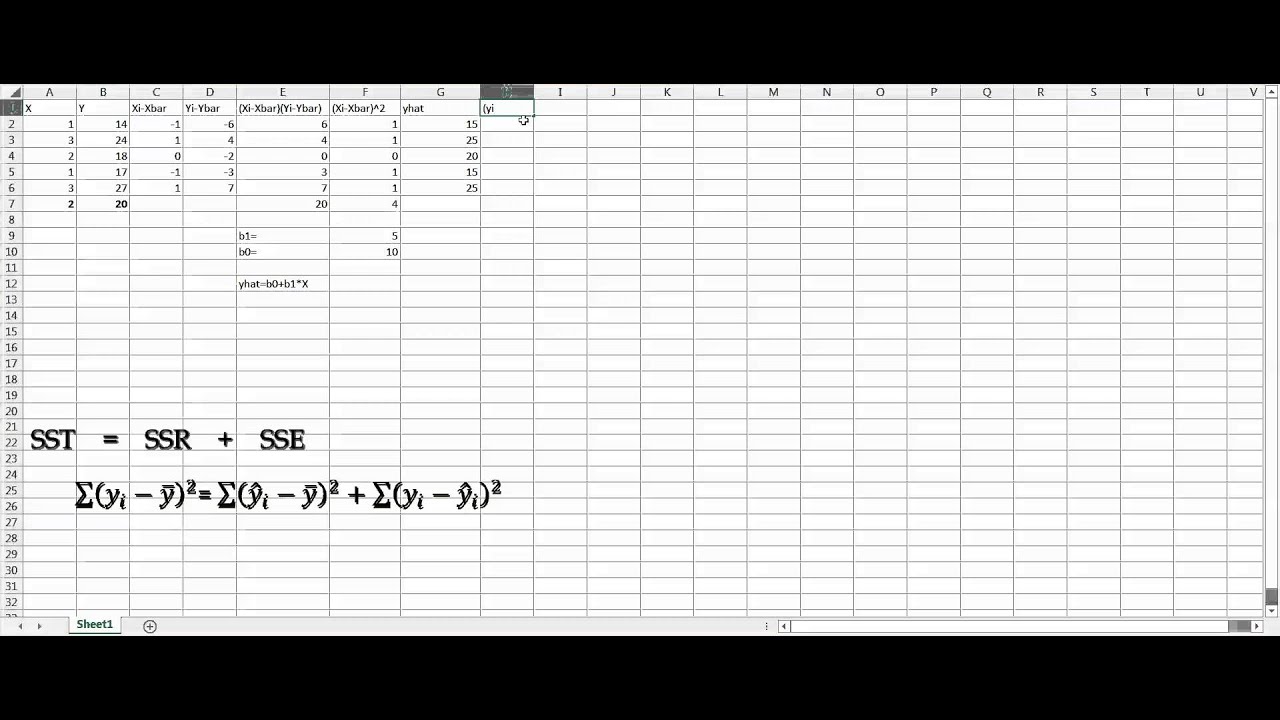

How to use Excel to calculate SSE, SST, and SSR for a simple linear regression model (YouTube)
